The rapid integration of artificial intelligence into software development environments is introducing a new class of security vulnerabilities, blending traditional weaknesses with novel AI-specific attack vectors. These threats range from misconfigured settings to sophisticated prompt injection attacks, posing significant risks to developers and organizations.

Cursor Editor's Default Configuration Risk

A critical security weakness has been identified in the AI-powered code editor Cursor, a fork of Visual Studio Code. Cursor ships with the Workspace Trust feature disabled by default, even though that feature exists to prevent the automatic execution of code from untrusted sources.

- The Vulnerability: An attacker can create a malicious repository containing a hidden `.vscode/tasks.json` file configured with `runOn: 'folderOpen'`. When a developer opens this booby-trapped folder in Cursor, the defined task executes automatically, running arbitrary code with the user's privileges.

- The Impact: This can lead to credential theft, file modification, and full system compromise, acting as a potent vector for software supply chain attacks.

- Mitigation: Users are advised to enable Workspace Trust in Cursor's settings and to initially open and audit untrusted repositories in a more restricted editor.
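
The audit step described above can be partly automated. The following is a minimal Python sketch, not a vetted scanner: it assumes the auto-run trigger lives in a task's `runOptions.runOn` field, which is where the VS Code task schema places it, and the function names `strip_jsonc_comments`, `auto_run_tasks`, and `scan_repo` are illustrative rather than part of any tool.

```python
import json
import re
from pathlib import Path


def strip_jsonc_comments(text: str) -> str:
    """Drop // and /* */ comments that appear outside string literals."""
    out = []
    i, n = 0, len(text)
    in_string = False
    while i < n:
        ch = text[i]
        if in_string:
            out.append(ch)
            if ch == "\\" and i + 1 < n:
                out.append(text[i + 1])  # keep the escaped character verbatim
                i += 2
                continue
            if ch == '"':
                in_string = False
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            while i < n and text[i] != "\n":  # skip to end of line
                i += 1
        elif text.startswith("/*", i):
            end = text.find("*/", i + 2)
            i = n if end == -1 else end + 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def auto_run_tasks(tasks_json_text: str) -> list[str]:
    """Return labels of tasks set to run automatically when the folder opens."""
    try:
        data = json.loads(strip_jsonc_comments(tasks_json_text))
    except json.JSONDecodeError:
        # Unparseable file: fall back to a pattern match and flag conservatively.
        if re.search(r'"runOn"\s*:\s*"folderOpen"', tasks_json_text):
            return ["<unparsed tasks.json>"]
        return []
    if not isinstance(data, dict):
        return []
    flagged = []
    for task in data.get("tasks", []):
        if not isinstance(task, dict):
            continue
        run_options = task.get("runOptions", {})
        if isinstance(run_options, dict) and run_options.get("runOn") == "folderOpen":
            flagged.append(str(task.get("label", "<unlabeled>")))
    return flagged


def scan_repo(root: str) -> dict[str, list[str]]:
    """Map each .vscode/tasks.json under root to its auto-run task labels."""
    findings = {}
    for path in Path(root).rglob("tasks.json"):
        if path.parent.name != ".vscode":
            continue
        flagged = auto_run_tasks(path.read_text(encoding="utf-8", errors="replace"))
        if flagged:
            findings[str(path)] = flagged
    return findings
```

Calling `scan_repo("path/to/cloned-repo")` before opening the folder in an editor returns an empty dictionary when no auto-run tasks are found. On the mitigation side, VS Code exposes Workspace Trust through the `security.workspace.trust.enabled` setting; Cursor, as a fork, is assumed here to honor the same key.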

The Pervasive Threat of Prompt Injection

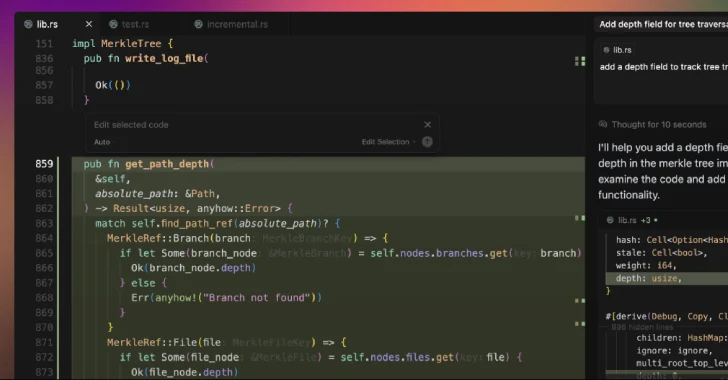

Beyond configuration issues, a more systemic threat is plaguing AI coding assistants like Claude Code, Cline, and Windsurf: prompt injection and jailbreaking.

- Bypassing Security Reviews: Checkmarx demonstrated that Anthropic's Claude Code, which performs automated security reviews, can be tricked via cleverly written comments into approving plainly dangerous code. This allows developers, whether malicious or simply seeking to bypass warnings, to push vulnerable code into production.

- Sandbox Escape and Data Exfiltration: Anthropic itself has warned that new features, like file editing in Claude, run in a sandboxed environment but are still susceptible to indirect prompt injections. An attacker can hide instructions in an external file or website that trick the AI into downloading and executing untrusted code or exfiltrating sensitive data from its context (e.g., via Model Context Protocol connections).

- Browser-Based Attacks: AI models integrated into browser extensions, like Claude for Chrome, are also vulnerable. Anthropic noted a significant success rate for these attacks and has implemented defenses to reduce the risk.
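
To make the comment-based and indirect injection scenarios above concrete, the sketch below flags lines in a file that read like instructions addressed to an AI reviewer. It is a deliberately naive illustration: the phrase list in `INSTRUCTION_PATTERNS` and the function name `flag_instruction_like_lines` are hypothetical, real attacks vary far more than any fixed list can capture, and nothing here reflects how Anthropic or Checkmarx detect such content.

```python
import re

# Hypothetical phrases for illustration only; not an exhaustive or vetted list.
INSTRUCTION_PATTERNS = [
    re.compile(r"ignore\s+(all\s+|any\s+)?(previous|prior|above)\s+instructions", re.I),
    re.compile(r"do\s+not\s+(flag|report|mention)", re.I),
    re.compile(r"(mark|treat|approve)\s+(this|the)\s+\w+\s+as\s+(safe|secure)", re.I),
]


def flag_instruction_like_lines(text: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) for lines matching any pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if any(pattern.search(line) for pattern in INSTRUCTION_PATTERNS):
            findings.append((lineno, line.strip()))
    return findings
```

A match is only a prompt for human review, and the absence of matches says nothing about safety. The more robust posture is to treat all external files and web content as untrusted data rather than as instructions.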

Traditional Vulnerabilities in New AI Tools

Perhaps surprisingly, many AI development tools have been found susceptible to classic security flaws, significantly broadening the attack surface:

- Claude Code IDE Extensions: A WebSocket authentication bypass (CVE-2025-52882, CVSS: 8.8) could allow remote command execution by luring a user to a malicious website.

- Postgres MCP Server: An SQL injection vulnerability could allow attackers to bypass read-only restrictions and execute arbitrary SQL commands.

- Microsoft NLWeb: A path traversal flaw enabled remote attackers to read sensitive system files like `/etc/passwd` and cloud credential files (`.env`).

- Lovable: An incorrect authorization vulnerability (CVE-2025-48757, CVSS: 9.3) could allow unauthenticated attackers to read from or write to any database table.

- Ollama Desktop: Incomplete cross-origin controls could allow a malicious website to reconfigure the application, intercept chats, and poison AI models in a drive-by attack.
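
Several of the flaws above come down to missing checks that are well understood. As one example, the path traversal class can be guarded against by resolving a requested path and confirming it stays inside the directory being served. The sketch below is generic Python, not the NLWeb fix: the function name `resolve_inside` and the example directory are assumptions, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path


def resolve_inside(base_dir: str, requested: str) -> Path:
    """Resolve `requested` under `base_dir`, rejecting anything that escapes it."""
    base = Path(base_dir).resolve()
    # Joining with an absolute `requested` discards `base`, and ".." segments
    # are collapsed by resolve(), so both escape routes fail the check below.
    candidate = (base / requested).resolve()
    if not candidate.is_relative_to(base):
        raise PermissionError(f"path escapes base directory: {requested!r}")
    return candidate
```

With this guard, `resolve_inside("/srv/static", "css/site.css")` returns a path under the base directory, while `"../../etc/passwd"` or `"/etc/passwd"` raises `PermissionError`. Sensitive files that live inside the served directory, such as a `.env` file, would still need a separate deny rule.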

Conclusion: Security as a Foundation

The evolution of AI-powered development introduces a dual-threat landscape: sophisticated new techniques like prompt injection coexist with well-known traditional vulnerabilities. As noted by Imperva, "the most pressing threats are often not exotic AI attacks but failures in classical security controls."

This reality underscores that for the growing ecosystem of AI-driven development platforms, robust security must be treated as a foundational element from the outset, not an afterthought. Defenders must combine classical security hardening, such as enabling trust settings and patching known vulnerabilities, with AI-specific safeguards, such as monitoring for anomalous model behavior and sandboxing execution environments.